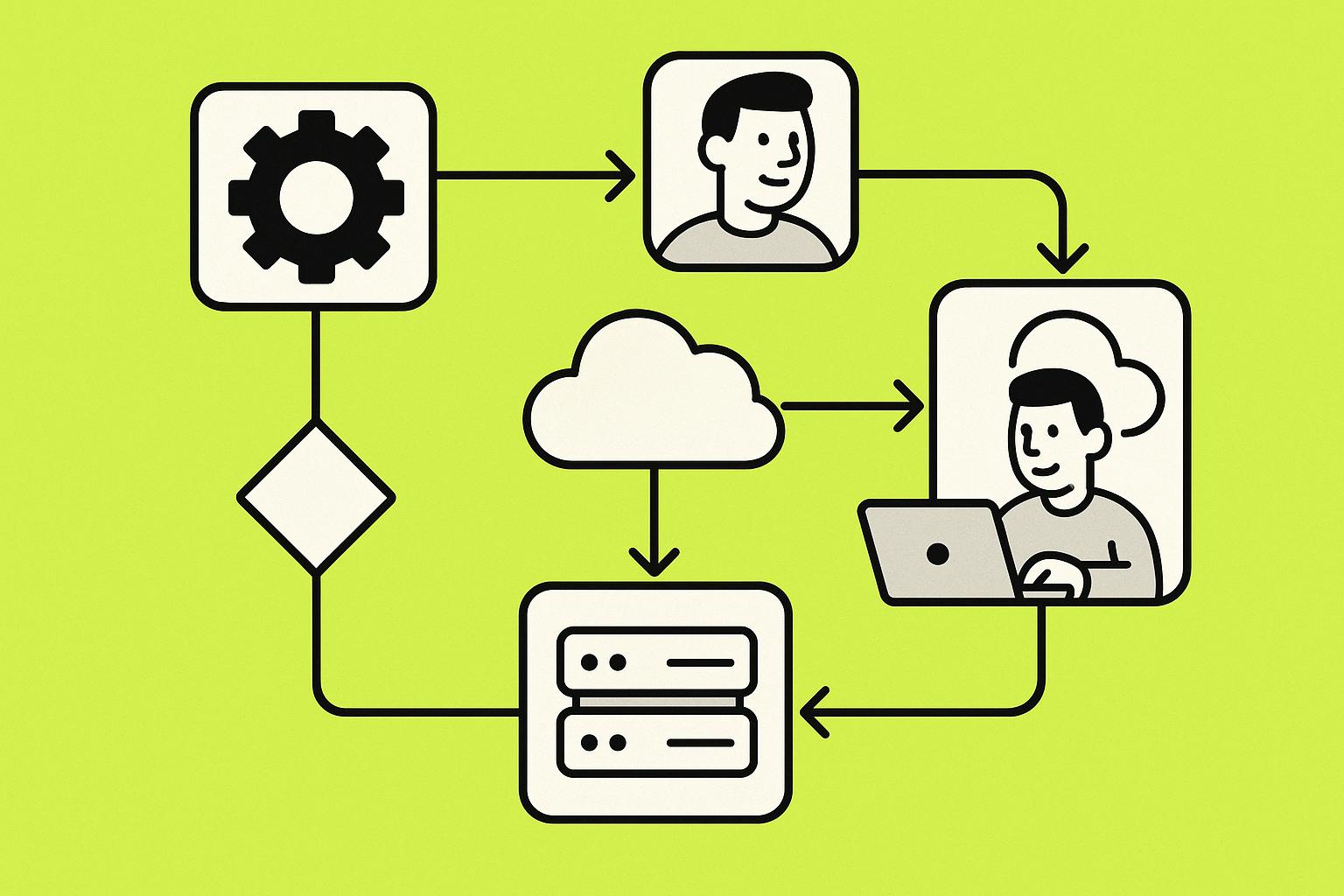

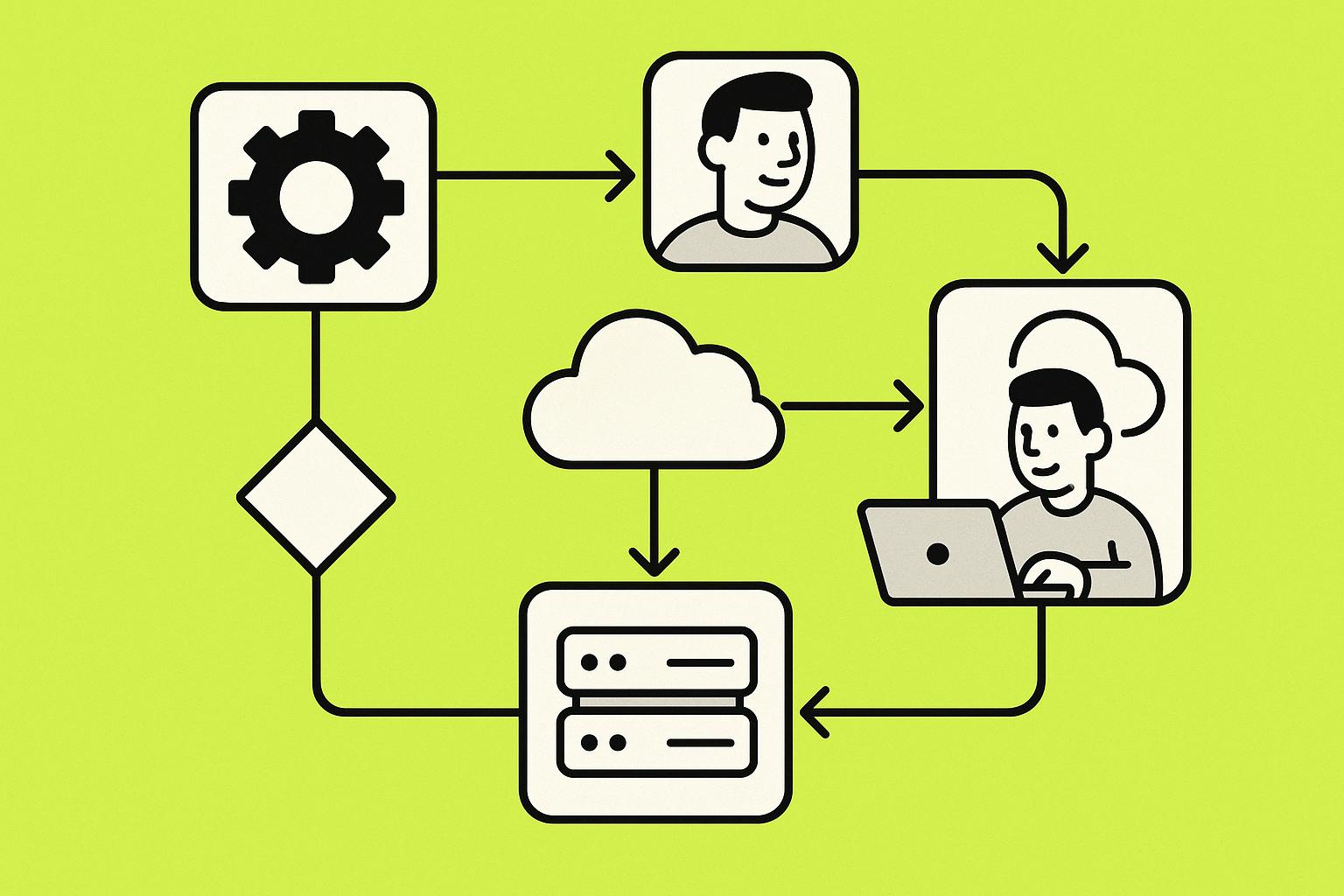

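Deploying machine learning (ML) models quickly and reliably is essential for businesses to stay competitive. Five strategies can help streamline ML deployment: shadow deployment, blue-green deployment, canary deployment, batch and real-time deployment, and automated monitoring and retraining.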

These methods balance speed, risk, and infrastructure requirements, enabling teams to deploy models faster while maintaining reliability.

| Strategy | Speed | Risk Level | Infrastructure Needs | Best For |
|---|---|---|---|---|
| Shadow Deployment | Moderate | Low | High – traffic mirroring | Testing major updates safely |
| Blue-Green Deployment | Fast | Medium | High – duplicate setups | Zero-downtime critical applications |
| Canary Deployment | Gradual | Low | Moderate – routing tools | Gradual updates with user feedback |
| Batch and Real-Time | Varies | Medium | Batch: Low; Real-time: High | Analytics (batch) or instant decisions (real-time) |
| Automated Monitoring | Fast | Medium | High – MLOps infrastructure | Frequent data changes or evolving trends |

Each approach fits specific needs, from risk-sensitive industries like healthcare to dynamic sectors like e-commerce. Align your choice with your goals and resources to deploy ML models effectively.

Shadow deployment, also known as a dark launch, allows you to test a new machine learning (ML) model alongside your existing production model. Both models process the same incoming requests, but only the current model's outputs are used in live operations. The new model's outputs are logged for internal evaluation, ensuring real-world testing without impacting live predictions.

"Shadow deployment consists of releasing version B alongside version A, fork version A's incoming requests, and send them to version B without impacting production traffic."

This method can be implemented in different ways. At the application level, inputs are sent to both models, and their outputs are logged separately, while only the current model's results are returned to users. At the infrastructure level, traffic is duplicated using load balancers, directing requests to both models. A champion/challenger framework is often used here, enabling multiple new models to be tested against the current one simultaneously. Companies like Twitter, Airbnb, Baidu, and Bytedance utilize tools like Diffy to compare model responses during such deployments.

Shadow deployment offers a moderate pace for rolling out new models. While setting up the infrastructure to run two models concurrently requires some upfront effort, it eliminates the need for prolonged staging or approval processes later. Once the system is in place, new models can be tested continuously. A recommended approach is to begin with a small percentage of traffic and gradually increase it, ensuring the system can handle the added load. This method allows for ongoing evaluation without disturbing live production.
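The application-level variant described above can be sketched in a few lines of Python. This is a minimal illustration, not a production router. `ShadowRouter`, `shadow_fraction`, and the log format are hypothetical names, and the models are plain callables. For simplicity the challenger is called synchronously; a real system would usually call it asynchronously so it adds no latency to the live response. The `shadow_fraction` parameter reflects the advice to start by mirroring a small share of traffic.

```python
import logging
import random
from typing import Any, Callable, Dict, List, Optional

# A "model" here is any callable that maps a request dict to a prediction.
Model = Callable[[Dict[str, Any]], Any]


class ShadowRouter:
    """Return the champion's prediction; mirror a share of requests to a challenger."""

    def __init__(self, champion: Model, challenger: Model,
                 shadow_fraction: float = 0.05, seed: Optional[int] = None) -> None:
        if not 0.0 <= shadow_fraction <= 1.0:
            raise ValueError("shadow_fraction must be between 0 and 1")
        self.champion = champion
        self.challenger = challenger
        self.shadow_fraction = shadow_fraction
        self._rng = random.Random(seed)
        self.shadow_log: List[Dict[str, Any]] = []  # stand-in for a real log sink

    def predict(self, request: Dict[str, Any]) -> Any:
        live = self.champion(request)  # only this result is returned to the user
        if self._rng.random() < self.shadow_fraction:
            try:
                shadow = self.challenger(request)
                self.shadow_log.append(
                    {"request": request, "champion": live, "challenger": shadow})
            except Exception:
                # A failing challenger must never affect the live response.
                logging.exception("challenger failed on a shadow request")
        return live
```

You can raise `shadow_fraction` step by step as you confirm that the extra load is acceptable. The caller always receives the champion's output.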

One of the biggest advantages of shadow deployment is its low-risk nature. Since only the outputs of the current live model are visible to users, any issues or bugs in the new model remain isolated. This setup provides a safety net for identifying potential problems, performance issues, or bottlenecks before the new model is fully deployed.

Shadow deployment requires substantial infrastructure since it involves running two full model-serving environments. Key components include traffic duplication tools, isolated environments for testing, data consistency mechanisms, comparison analytics, and performance monitoring systems. Automated frameworks that synchronize and verify data can streamline operations, and using mock interfaces for non-critical integrations can simplify the process while maintaining essential connections.
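A simple starting point for comparison analytics is to summarize how often the two models agree on the mirrored requests. The sketch below assumes each logged record holds numeric `champion` and `challenger` outputs; this is a hypothetical log format. It treats predictions within `tolerance` of each other as agreeing. For classifiers you would compare labels for exact equality instead.

```python
from typing import Dict, Mapping, Sequence


def compare_shadow_log(records: Sequence[Mapping[str, float]],
                       tolerance: float = 0.05) -> Dict[str, float]:
    """Summarize agreement between champion and challenger outputs."""
    if not records:
        raise ValueError("no shadow records to compare")
    diffs = [abs(r["champion"] - r["challenger"]) for r in records]
    agreeing = sum(1 for d in diffs if d <= tolerance)
    return {
        "n": float(len(diffs)),
        "agreement_rate": agreeing / len(diffs),  # share of requests within tolerance
        "mean_abs_diff": sum(diffs) / len(diffs),
        "max_abs_diff": max(diffs),               # worst single disagreement
    }
```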

This approach is ideal for major model upgrades, such as replacing core algorithms or transitioning from legacy systems to modern frameworks. It’s particularly valuable in industries like healthcare, retail, and hospitality, where model errors could have serious consequences. Shadow deployment is also a great choice for performance tuning and stress testing under real-world production loads.

Blue-green deployment takes a different approach compared to shadow deployment by using two separate but identical environments. This method ensures a seamless transition between the current and updated models. It involves two mirrored setups: the "blue" environment handles live traffic, while the "green" environment is used for testing the new machine learning (ML) model. This way, you can test and validate the new model in conditions identical to production before redirecting user traffic to it.

Here’s how it works: all incoming traffic initially flows to the blue environment. Meanwhile, the updated model is deployed and tested in the green environment. Once the green setup meets performance expectations, traffic is switched to it. The blue environment remains on standby for an immediate rollback if any issues arise.
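You can picture the switch-and-rollback logic as a router that holds a pointer to the live environment. The sketch below is illustrative only. `BlueGreenRouter`, `deploy_to_idle`, and the `smoke_test` hook are hypothetical names, and each "environment" is reduced to a model callable. In a real deployment the switch usually happens at the load balancer or DNS layer rather than in application code.

```python
import threading
from typing import Any, Callable, Dict, Optional

Model = Callable[[Dict[str, Any]], Any]


class BlueGreenRouter:
    """Send all traffic to one environment; the other stays on standby."""

    def __init__(self, blue: Model, green: Model) -> None:
        self._envs: Dict[str, Model] = {"blue": blue, "green": green}
        self._live = "blue"  # all traffic starts on blue
        self._lock = threading.Lock()

    @property
    def live(self) -> str:
        return self._live

    @property
    def idle(self) -> str:
        return "green" if self._live == "blue" else "blue"

    def deploy_to_idle(self, model: Model) -> None:
        """Install a new model in the standby environment for testing."""
        with self._lock:
            self._envs[self.idle] = model

    def switch(self, smoke_test: Optional[Callable[[Model], bool]] = None) -> bool:
        """Move all traffic to the idle environment at once if it passes the test."""
        with self._lock:
            candidate = self._envs[self.idle]
            if smoke_test is not None and not smoke_test(candidate):
                return False  # traffic stays where it is
            self._live = self.idle
            return True

    def rollback(self) -> bool:
        """The previous environment is still on standby, so rollback is another switch."""
        return self.switch()

    def predict(self, request: Dict[str, Any]) -> Any:
        return self._envs[self._live](request)
```

Rollback stays instant only as long as nothing new has been deployed to the standby environment. That is why the old environment is kept untouched until the new one has proven itself.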

Amazon SageMaker, for example, uses blue-green deployments when updating inference endpoints, so the endpoints stay available while a new model is rolled out.

One of the standout features of blue-green deployment is its ability to switch traffic instantly once the green environment is ready. Unlike rolling deployments, which update components gradually, this method redirects all traffic at once. Although setting up two complete environments takes more upfront effort, it allows for thorough testing without the pressure of immediate deployment, making the eventual switch both quick and reliable.

This approach excels in minimizing risk thanks to its built-in rollback option. However, the instant traffic switch means rigorous testing is non-negotiable. By replicating production conditions in the green environment, you significantly reduce the chances of encountering issues post-deployment. If something does go wrong, the blue environment is ready to take over immediately, ensuring minimal disruption.

Implementing blue-green deployment requires doubling your production infrastructure. This means maintaining duplicate servers, load balancers, and databases for both the blue and green environments, which can drive up operational and maintenance costs. To make this work effectively, you’ll need automated pipelines, dynamic traffic routing, and robust monitoring tools. Using Infrastructure-as-Code (IaC) and automated provisioning tools ensures that both environments are configured identically. This method is ideal for organizations prepared to invest in high-grade, production-level infrastructure.
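One way to back up the goal of identical configuration is an automated parity check that compares the two environments' settings before any switch. This is a hedged sketch that assumes each environment's configuration can be exported as a flat dictionary. `config_drift` and the example keys are hypothetical. Keys that are expected to differ, such as the environment name or model version, are ignored.

```python
from typing import Any, Dict, Iterable, List, Tuple


def config_drift(blue: Dict[str, Any], green: Dict[str, Any],
                 ignore: Iterable[str] = ("name", "model_version")
                 ) -> List[Tuple[str, Any, Any]]:
    """Return (key, blue_value, green_value) for every setting that differs."""
    ignored = set(ignore)
    drift = []
    for key in sorted((blue.keys() | green.keys()) - ignored):
        # A key missing from one side shows up as None on that side.
        b, g = blue.get(key), green.get(key)
        if b != g:
            drift.append((key, b, g))
    return drift
```

An empty result means the environments match on everything except the intentionally different keys. A non-empty result could block the traffic switch in an automated pipeline.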

Blue-green deployment shines in mission-critical ML applications where uptime and quick recovery are non-negotiable. It’s particularly well-suited for major model updates that could have a significant impact on user experience or business operations. Industries like financial services and e-commerce, where downtime can be costly, often benefit from this approach.

If you need to thoroughly validate a new model before release but also want the ability to switch to it quickly once it’s confirmed to perform well, blue-green deployment is an excellent choice. It’s best for organizations that can handle the additional infrastructure costs and prioritize maintaining seamless operations.

The term "canary deployment" comes from the old mining practice of using canary birds as early warning systems for toxic gases. Similarly, in this strategy, you release a new model to a small group of users while the majority continues using the stable version. It’s a cautious way to roll out updates.

"Just as the canary bird would alert miners to toxic fumes while they worked, a canary deployment is used by MLOps and others to detect issues in their new CI/CD process releases." - Wallaroo.AI

Here’s how it works: a small percentage of user requests - say 10% or 25% - are routed to the new model. You monitor its performance against the stable version. If the results are positive, you increase the traffic gradually. If issues arise, you can quickly revert to the stable version without impacting most users.
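Percentage-based routing is often implemented by hashing a stable identifier into buckets, so that each user consistently sees the same version. The following is a minimal sketch under that assumption. `route` and `canary_percent` are hypothetical names, and in practice a load balancer or service mesh usually performs this split.

```python
import hashlib

BUCKETS = 10_000  # resolution of the split: 0.01% of traffic per bucket


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def route(user_id: str, canary_percent: float) -> str:
    """Send roughly canary_percent% of users to the canary model."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "canary" if bucket(user_id) < canary_percent * BUCKETS / 100 else "stable"
```

The bucket depends only on the user ID. Raising `canary_percent` from 10 to 25 therefore keeps the original canary users in the canary group and adds new ones, which makes the gradual increase described above easy to reason about.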

A great example of this in action is Facebook’s approach in March 2021. They used multiple stages of canary deployment: first with internal employees, then with a small group of external users, and finally scaling up to their entire user base.

Canary deployment strikes a balance between speed and caution. While it’s not as immediate as blue-green deployment, it provides real-world performance data within hours of releasing to the canary group.

Because the rollout is incremental, you don’t have to wait for lengthy testing phases before users experience the changes. However, full deployment takes more time since traffic percentages are increased gradually. This makes it a great choice when you need to move quickly but can’t risk significant disruptions.

"Canarying allows the deployment pipeline to detect defects as quickly as possible with as little impact to your service as possible." - Google SRE

This approach is excellent for reducing risk. By exposing only a small group of users to the new model, any problems are contained. The gradual rollout helps uncover issues before they affect the entire user base.

The key to minimizing risk lies in setting clear success criteria ahead of time. Metrics like error rates, response times, and user engagement thresholds determine whether to proceed or roll back. Unlike shadow deployment, which focuses on internal metrics, canary deployment provides real user feedback, making it easier to catch issues that might not show up in test environments.
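Writing the success criteria down as code makes the proceed-or-roll-back decision repeatable. The thresholds below are placeholder values, and `CanaryCriteria`, `Metrics`, and `decide` are hypothetical names. Real criteria should come from your own service-level objectives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanaryCriteria:
    max_error_rate_increase: float = 0.005  # absolute, i.e. +0.5 percentage points
    max_p95_latency_ratio: float = 1.10     # canary p95 at most 10% slower
    min_engagement_ratio: float = 0.98      # canary engagement at least 98% of stable
    min_requests: int = 1_000               # don't decide on too little traffic


@dataclass(frozen=True)
class Metrics:
    requests: int
    error_rate: float
    p95_latency_ms: float
    engagement: float


def decide(stable: Metrics, canary: Metrics,
           c: CanaryCriteria = CanaryCriteria()) -> str:
    """Return 'wait', 'rollback', or 'proceed' (increase canary traffic)."""
    if canary.requests < c.min_requests:
        return "wait"
    if canary.error_rate - stable.error_rate > c.max_error_rate_increase:
        return "rollback"
    if canary.p95_latency_ms > stable.p95_latency_ms * c.max_p95_latency_ratio:
        return "rollback"
    if canary.engagement < stable.engagement * c.min_engagement_ratio:
        return "rollback"
    return "proceed"
```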

If something goes wrong, rolling back is simple because the stable version is still handling the majority of traffic. This makes canary deployment particularly valuable for major updates where you’re not entirely confident in the new model.

Compared to blue-green deployment, canary deployment is more cost-efficient since it doesn’t require a duplicate production environment. Both the stable and canary versions run simultaneously, but the canary version only needs resources for the smaller traffic percentage it handles.

To make this work, you’ll need robust monitoring tools like Prometheus or Grafana to track performance metrics in real time. Automated CI/CD pipelines are essential for managing traffic routing and rollbacks, and load balancers must be capable of directing traffic based on percentages.

Database management is relatively straightforward since both versions share the same data sources. However, you’ll need to monitor database interactions closely to ensure the canary model doesn’t negatively impact the stable version’s performance.

Canary deployment is ideal when introducing major changes while maintaining system stability. It’s particularly useful for experimental features, significant algorithm updates, or when working with older systems that are hard to replicate in test environments.

This approach is also great for addressing performance or scaling concerns with your new model. The gradual rollout allows you to observe resource usage and response times under real-world conditions before committing fully. It’s especially suitable for organizations that prioritize user experience and can’t risk widespread issues, even temporarily.

If you’re okay with a slower rollout and want thorough real-world testing, canary deployment is a smart choice. It’s particularly effective for machine learning models, where performance can vary widely depending on actual user behavior - something often hard to replicate in testing. This gradual rollout complements other deployment strategies discussed later.

When deploying machine learning models, timing plays a crucial role in how predictions are made. Batch and real-time deployment strategies focus on when predictions happen rather than how models are rolled out. Batch deployment processes data at scheduled intervals, while real-time deployment handles incoming data as it arrives, offering immediate responses.

With batch deployment, large datasets are analyzed periodically. Models process historical data in chunks, generating predictions that can be stored and accessed later. On the other hand, real-time deployment operates with minimal delay, making predictions instantly as new data flows in.

Choosing between these methods depends on whether your application needs immediate responses or can function with periodic updates. For instance, Amazon uses batch deployment to generate product recommendations, caching them for fast retrieval. Meanwhile, UberEats calculates delivery time estimates in real time when an order is placed. This choice affects speed, risk, infrastructure needs, and suitable use cases. It works alongside the rollout strategies covered earlier rather than replacing them.
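A toy recommender shows the difference between the two serving paths. This is an illustrative assumption, not how Amazon or UberEats actually implement their systems. The batch job precomputes predictions into a dictionary that stands in for a cache or key-value store, while the real-time path calls the model on each request. `run_batch_job` and `PredictionServer` are hypothetical names.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical recommender: user ID -> list of recommended item IDs.
Recommender = Callable[[str], List[str]]


def run_batch_job(model: Recommender, user_ids: Iterable[str]) -> Dict[str, List[str]]:
    """Batch path: score many users on a schedule and store the results."""
    return {uid: model(uid) for uid in user_ids}


class PredictionServer:
    def __init__(self, model: Recommender, cache: Dict[str, List[str]]) -> None:
        self.model = model
        self.cache = cache  # stands in for a key-value store filled by the batch job

    def serve_batch(self, user_id: str) -> List[str]:
        # Cheap lookup; results are only as fresh as the last batch run.
        return self.cache.get(user_id, [])

    def serve_realtime(self, user_id: str) -> List[str]:
        # Runs the model now: reflects current inputs but costs a model call.
        return self.model(user_id)
```

The batch path answers with a cheap lookup but can only return what the last job computed. The real-time path always reflects the current input, at the cost of running the model on every request.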

The timing of predictions significantly affects system performance and reliability. Batch deployment offers predictable processing speeds but works on a delayed schedule. Predictions are updated at fixed intervals, which naturally introduces some lag. In contrast, real-time deployment delivers predictions almost instantly, meeting strict millisecond-level latency requirements. However, processing single requests in real time is less efficient than batch processing.

Batch systems can run on powerful computing resources during off-peak hours, which makes them cost-effective. Real-time systems, by contrast, must stay available around the clock so they can handle a request at any moment.

Batch deployment is generally less risky because it’s easier to monitor and manage. If an issue arises, you can fix it and rerun the job without affecting live users. Real-time deployment, by comparison, carries higher risks due to its complexity and the immediate impact on users. Failures in real-time systems - whether from model errors or performance bottlenecks - can directly affect user experience. These systems must process data and deliver predictions within tight timeframes, often in milliseconds.

"ML model deployment can take a long time, and the models require constant attention to ensure quality and efficiency. For this reason, ML model deployment must be properly planned and managed to avoid inefficiencies and time-consuming challenges." - Pavel Klushin, Head of Solution Architecture, Qwak

Real-time systems also face heightened security concerns. They require robust authentication, authorization, and secure communication protocols to mitigate risks.

Batch deployment is less demanding when it comes to infrastructure because low latency isn’t a priority. It can use cost-efficient storage solutions and schedule processing during times of lower demand. Real-time deployment, on the other hand, requires a sophisticated, low-latency infrastructure to handle high data volumes and computational loads. This includes powerful hardware, redundancy systems, and advanced monitoring tools. Additionally, feature engineering from streaming data can be resource-intensive.

Real-time systems must also scale dynamically to handle traffic spikes while keeping costs under control. Cloud platforms can help scale resources automatically, but managing expenses remains a critical challenge.

Batch deployment is ideal for applications where some delay is acceptable. It’s well-suited for tasks like generating daily reports, analyzing historical trends, customer segmentation, or creating financial forecasts. If your application doesn’t require instant updates, batch deployment is a practical choice.

Real-time deployment, however, is crucial for applications that demand immediate decision-making. Fraud detection systems, for example, need to flag suspicious transactions instantly. Recommendation engines must adapt quickly to user behavior, and autonomous vehicles rely on split-second decisions. Real-time deployment shines in situations where the benefits of immediate predictions outweigh the costs of maintaining continuous infrastructure.

Many organizations find success by combining both methods. Batch processing is often used for complex analytical models, while real-time inference powers user-facing features. This hybrid approach helps balance cost, latency, and performance. Ultimately, aligning the timing of deployment with your business needs is key to a smooth and efficient operation.

Building on earlier deployment strategies, automated monitoring ensures that machine learning models remain effective, even as conditions change.

Automated systems for model monitoring and retraining constantly track performance and step in when results start to slip. Unlike manual methods, where support teams have to keep an eye on model behavior, automated tools leverage MLOps to identify problems and trigger retraining without human input. These systems monitor factors like data drift, accuracy, and prediction quality, and they automatically initiate retraining when predefined thresholds are exceeded.
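The sketch below is one concrete example of a data-drift check with a predefined threshold. It computes the population stability index (PSI) between a reference sample of a feature and recent production values. Three choices here are assumptions: PSI as the metric, 10 equal-width bins, and a retraining threshold of 0.25. The values 0.1 and 0.25 are common rules of thumb, not universal standards. `psi` and `should_retrain` are hypothetical helpers.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference sample; values outside its
            # range are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(reference: Sequence[float], recent: Sequence[float],
                   threshold: float = 0.25) -> bool:
    """Trigger retraining when drift on this feature exceeds the threshold."""
    return psi(reference, recent) > threshold
```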

This approach not only minimizes human error but also makes model training consistent and repeatable. When the monitoring system detects a shift in incoming data, or performance drops below acceptable levels, the pipeline can retrain the model on updated data. This keeps models aligned with changing data trends and business needs.

Automated monitoring and retraining significantly speed up deployment cycles by cutting out manual delays. In traditional setups, data scientists have to monitor dashboards, analyze metrics, and manually initiate retraining. Automated systems, on the other hand, can recognize performance issues within hours or days and act immediately, bypassing the need for human intervention.

Once the system identifies a performance issue, it can deploy updated models without waiting for manual approvals. This continuous cycle of detection and retraining ensures models remain accurate and relevant, avoiding the lag that often comes with manual processes.

That said, setting up these systems does require an initial investment of time. Teams need to define acceptable performance levels, configure retraining triggers, and establish workflows before they can enjoy the faster deployment speeds.

Automated systems bring both benefits and challenges. On the upside, they reduce the risk of human error and ensure round-the-clock monitoring. Unlike manual monitoring, which might miss issues during off-hours or when staff are unavailable, automated systems provide consistent coverage.

For example, during the COVID-19 pandemic in 2020, many machine learning models struggled with sudden shifts in customer behavior and economic conditions. A UK banking survey from August 2020 found that 35% of bankers had seen a negative impact on model performance due to these changes. Automated systems could have responded to these disruptions more quickly than manual processes.

However, automation isn’t without its risks. Poorly set thresholds can lead to unnecessary retraining, wasting resources and potentially harming model performance. Additionally, if the data used for retraining contains biases or errors, automated systems can amplify these problems. To mitigate these risks, teams need to implement robust data validation and model checks to ensure the system operates as intended.
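Two simple guardrails address these risks. First, trigger retraining only after several consecutive threshold breaches, then pause for a cooldown period. Second, promote a retrained model only if it passes data checks and beats the current model on a holdout set. The sketch below illustrates both under those assumptions. `RetrainTrigger`, `should_promote`, and all default values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RetrainTrigger:
    threshold: float               # e.g. a drift score or an error rate
    consecutive_required: int = 3  # breaches in a row before acting
    cooldown_checks: int = 10      # checks to skip after a retrain is triggered
    _streak: int = field(default=0, init=False, repr=False)
    _cooldown_left: int = field(default=0, init=False, repr=False)

    def observe(self, score: float) -> bool:
        """Record one monitoring check; return True when retraining should start."""
        if self._cooldown_left > 0:
            self._cooldown_left -= 1  # give the last retrain time to take effect
            return False
        self._streak = self._streak + 1 if score > self.threshold else 0
        if self._streak >= self.consecutive_required:
            self._streak = 0
            self._cooldown_left = self.cooldown_checks
            return True
        return False


def should_promote(candidate_metric: float, current_metric: float,
                   min_gain: float = 0.0, data_checks_passed: bool = True) -> bool:
    """Promote only if validation passed and the candidate beats the current
    model on the same holdout set (higher metric is better)."""
    return data_checks_passed and candidate_metric > current_metric + min_gain
```

A single noisy reading no longer starts a retraining run, and a retrained model that is no better than the current one is never deployed.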

Managing these risks requires an infrastructure that is as reliable as the automated processes themselves.

Automated monitoring and retraining systems need a strong infrastructure to handle tasks like data collection, storage, processing, model training, and deployment. Essential components include reliable storage systems, databases, and ML pipeline tools. Even for small-scale operations, the costs for maintaining this infrastructure - spanning model support, data management, and engineering - can range from $60,750 to $94,500.

Key tools include monitoring dashboards for tracking model performance, feature stores for consistent data access, and metadata systems for organizing information. Depending on the use case, the system may need to support both batch and real-time monitoring. Real-time setups involve continuous data streaming and metric calculations, while batch monitoring evaluates performance periodically.
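In the real-time case, a rolling window over recent labeled predictions is a common way to keep a continuously updated metric. This sketch assumes that ground-truth labels eventually arrive for each prediction. `RollingAccuracy` and its thresholds are hypothetical. Batch monitoring would instead compute the same metric over a whole period, such as the previous day.

```python
from collections import deque
from typing import Any, Deque


class RollingAccuracy:
    """Accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window: int = 500, alert_below: float = 0.9,
                 min_samples: int = 50) -> None:
        self._hits: Deque[bool] = deque(maxlen=window)  # oldest results drop off
        self.alert_below = alert_below
        self.min_samples = min_samples

    def update(self, prediction: Any, label: Any) -> None:
        self._hits.append(prediction == label)

    @property
    def accuracy(self) -> float:
        return sum(self._hits) / len(self._hits) if self._hits else float("nan")

    def should_alert(self) -> bool:
        # Avoid alerting on too few samples, e.g. right after a restart.
        return len(self._hits) >= self.min_samples and self.accuracy < self.alert_below
```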

"MLOps is an ML engineering culture and practice that aims at unifying ML system development (Dev) and ML system operation (Ops). Practicing MLOps means that you advocate for automation and monitoring at all steps of ML system construction, including integration, testing, releasing, deployment and infrastructure management."

Organizations can choose between cloud-based, on-premises, or hybrid infrastructures based on factors like cost, scalability, and security. Cloud platforms offer automatic scaling but require diligent cost management. On-premises solutions provide more control but come with higher upfront costs and ongoing maintenance needs.

Automated monitoring and retraining are particularly useful in environments where data patterns shift frequently. Use cases like fraud detection and search engine algorithms benefit greatly from these systems. These applications face constant challenges from evolving threats or changing user behaviors, which can quickly render models outdated.

Financial services are a prime example. Credit scoring models must adjust to economic shifts, while fraud detection systems need to adapt to emerging attack strategies. Similarly, e-commerce recommendation engines thrive on automation, as they need to keep up with fluctuating customer preferences and inventory changes.

Manufacturing is another area where automation shines. Predictive maintenance systems, for instance, can retrain themselves on new sensor data to identify developing failure patterns. As machinery ages or operating conditions evolve, automated retraining ensures predictions remain accurate.

"Without a way to understand and track these data (and hence model) changes, you cannot understand your system." - Christopher Samiullah, Deployment of Machine Learning Models

To address performance issues and prediction failures, teams should implement logging, alerting, and automated retraining. The key lies in setting clear monitoring objectives and choosing the right metrics for evaluating model quality, data drift, and data reliability.

Automated systems work best when organizations already have stable MLOps processes and well-defined performance goals. For teams still developing their machine learning capabilities, starting with manual approaches and gradually introducing automation can be a more practical path. With automated monitoring and retraining in place, teams can maintain the rapid, reliable deployments necessary to stay ahead in competitive environments.

This table provides a concise overview of five deployment strategies, helping you align their features with your specific needs. It outlines key aspects like deployment speed, risk level, and infrastructure requirements to assist in decision-making.

| Strategy | Speed of Deployment | Risk Level | Infrastructure Requirements | Best Use Cases |
|---|---|---|---|---|
| Shadow Deployment | Moderate – no user impact during testing | Low – no user exposure during validation | Replica of live system, traffic mirroring capabilities | Safely testing new models, validating performance before launch, critical industries like healthcare |
| Blue-Green Deployment | Fast – instant switchover once tested | Medium – all users exposed simultaneously but quick rollback available | Two identical full environments running in parallel | Zero-downtime needs, critical apps requiring immediate rollback, financial services |
| Canary Deployment | Moderate – gradual rollout takes longer | Low – limited initial user exposure | Advanced traffic routing and monitoring tools within the same environment | Gradual feature rollouts, user feedback collection, e-commerce platforms |
| Batch and Real-Time Deployment | Varies – batch is slower but efficient; real-time is immediate | Medium – depends on monitoring and validation processes | Batch: cost-effective scaling; Real-time: high-performance computing infrastructure | Batch: data analytics, reporting; Real-time: fraud detection, recommendation engines |
| Automated Monitoring and Retraining | Fast – eliminates manual retraining delays | Medium – reduces human error but needs robust validation | Comprehensive MLOps infrastructure, monitoring dashboards, feature stores | Environments with frequent data changes, fraud detection, predictive maintenance |

The choice of deployment strategy depends heavily on your team’s experience, regulatory obligations, and available resources. For instance, well-established teams with mature MLOps practices may benefit from automated monitoring and retraining for efficiency. On the other hand, smaller teams or those new to machine learning deployment might find canary or blue-green strategies more approachable. Industries like healthcare and finance often lean toward shadow or canary deployments to mitigate risks and ensure thorough validation. Aligning your deployment approach with your operational goals and constraints is crucial for success.

Choosing the right machine learning (ML) deployment strategy can speed up solution delivery while keeping operational risks in check. The five strategies we've discussed - shadow, blue-green, canary, batch and real-time, and automated monitoring - are tailored to fit different team capabilities, infrastructure setups, and risk tolerances.

When implemented effectively, these approaches can significantly improve efficiency. Incorporating deployment strategies into MLOps practices can cut development timelines from months to just weeks or even days. This speed is critical, especially with the global MLOps market expected to grow from $1.22 billion in 2022 to $34.64 billion by 2032, with a projected annual growth rate of 39.74%.
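As a consistency check, the compound annual growth rate over the ten years from 2022 to 2032 works out to:

```latex
\text{CAGR} = \left(\frac{34.64}{1.22}\right)^{1/10} - 1 \approx 28.39^{0.1} - 1 \approx 0.397 \approx 39.7\%
```

This agrees with the projected annual growth rate of 39.74% quoted above.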

"MLOps introduced a structured, reliable way to build, deploy, and maintain machine learning models in production." – Webelight Solutions Pvt. Ltd.

Real-world results back this up. For instance, EY achieved a 40–60% reduction in false positives by adopting an optimized deployment strategy. These examples highlight how the right approach can improve both performance and speed.

However, success isn’t just about technical execution. A well-rounded strategy requires the right expertise and a methodical implementation process. While 92% of companies plan to increase their AI investments over the next three years, only 48% of AI projects actually make it to production, according to Gartner. This gap between ambition and execution underscores the challenges organizations face.

To address these challenges, solutions like 2V AI DevBoost provide structured support. Their 5-week sprint approach has helped teams achieve productivity gains ranging from 15% to 200%. For example, one team cut pull request review times from 19 minutes to 10 minutes, nearly halving them. Another reduced unit testing from 23% to 15% of total development time. These results show how tailored strategies can bridge the gap between planning and execution.

Ultimately, successful ML deployment goes beyond technical implementation. It requires collaboration across teams, robust monitoring systems, and ongoing optimization. Whether you choose shadow, blue-green, canary, batch/real-time, or automated strategies, the key is aligning your approach with your operational needs and long-term AI goals.

When deciding on a machine learning (ML) deployment strategy, several factors come into play. Start by assessing latency requirements, data privacy, security needs, budget limitations, and the infrastructure you have at your disposal. These elements will guide your approach and ensure your deployment aligns with your technical and operational constraints.

You’ll also want to think about scalability - can the solution handle growth? And don’t overlook the resources your team has for ongoing maintenance. The size and complexity of your ML project matter too, especially when considering how it will affect end-users. By tailoring your strategy to your organization’s goals and operational demands, you can achieve a deployment that balances efficiency with performance.

To streamline monitoring and retraining in machine learning setups, having the right infrastructure in place is crucial. This means setting up systems that handle data processing, model training, and deployment efficiently. Key components include automated CI/CD pipelines, distributed computing platforms like Kubernetes, and dependable storage solutions to handle large datasets seamlessly.

Equally important are monitoring tools that can keep an eye on model performance in real time. These tools should detect issues such as data drift or a drop in performance and automatically trigger retraining workflows when needed. By building this kind of setup, you can ensure your machine learning operations remain efficient, reliable, and ready to scale.

Shadow deployment and canary deployment take different approaches when it comes to balancing risk and speed during model testing.

Shadow deployment runs the new model in parallel with the existing one but never shows its outputs to users. This method is all about safety: it lets you validate the model thoroughly on real traffic without disrupting the user experience. However, users never react to the new model's predictions, so you can't measure effects such as clicks or conversions. As a result, gathering actionable insights about business impact may take longer.

Canary deployment, by contrast, introduces the new model to a small subset of actual users. This approach carries some risk since it can directly impact the user experience, but it also delivers quicker, real-world feedback. If issues arise, adjustments or rollbacks can be made swiftly.

Deciding between these strategies boils down to your business priorities. If minimizing risk is your top concern, shadow deployment is the safer bet. But if speed and real-world testing are more critical, canary deployment is the way to go.